Quick Setup Guide: (Free!) OpenRouter Setup


1. Create OpenRouter Account & Get API Key

- Go to https://openrouter.ai

- Create an account

- Generate an API key from your dashboard

2. Choose Your Model

- Recommended free model: mistralai/mistral-7b-instruct:free

- Copy the exact model name

3. Configure Game Settings

- Open game settings (top right menu button in-game)

- Change API URL to: https://openrouter.ai/api/v1/chat/completions

- Paste your API key

- Enter your chosen model name

- Optional: in the same settings menu, raise the memory limit to about 1-2k below the model’s max context length. For example, if the model’s max context is 5k, increase the memory limit from 2k to 3k.
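The memory-limit rule of thumb above is simple arithmetic, so here is a minimal sketch of it. The function name `suggest_memory_limit` and the default reserve of 2000 tokens are assumptions made for illustration; they are not part of the game.

```python
def suggest_memory_limit(max_context_tokens: int, reserve_tokens: int = 2000) -> int:
    """Suggest an in-game memory limit that stays below the model's context length.

    reserve_tokens is the headroom to leave free (the guide suggests 1-2k).
    """
    if max_context_tokens <= 0:
        raise ValueError("max_context_tokens must be positive")
    if reserve_tokens < 0:
        raise ValueError("reserve_tokens must not be negative")
    # Never return a negative limit for very small context windows.
    return max(max_context_tokens - reserve_tokens, 0)


# The guide's own example: a 5k model with 2k of headroom gives a 3k limit.
print(suggest_memory_limit(5000))
```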

That’s it! You should now be able to play the game using faster AI models!
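If the game reports errors, it can help to test the same three settings (API URL, API key, model name) outside the game. Below is a rough sketch using only the Python standard library. The helper names `build_chat_request` and `send_chat_request` are made up for this example, and the response parsing assumes the usual OpenAI-style chat completion shape; this is not how the game itself sends requests.

```python
import json
import urllib.request

# Same URL that goes into the game's API URL setting.
API_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a single-message chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the model's reply text.

    Assumes an OpenAI-style response: choices[0].message.content.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

To try it, call `send_chat_request(build_chat_request("YOUR_API_KEY", "mistralai/mistral-7b-instruct:free", "Say hello"))` with your real key in place of the placeholder. If this fails with the same settings, the problem is likely the key or model name rather than the game.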

4. So…

- There’s a limit to small free models, however. The little 7b model will likely fail when the world is too big and complex like Veilwood :(

- If you want to play in a really large, complex world, I recommend spending a few bucks on OpenRouter and using a paid model such as mistral nemo instruct. It doesn’t have to be an expensive model, just one that is smart and creative.

Important:

- Free models have rate limits!

- Different models have stricter or looser limits. You can experiment by switching between free models.

Best free model: meta-llama/llama-3.3-70b-instruct:free

- This model is very smart and has a huge memory (128K context!)

- HOWEVER: its rate limits are stricter, which means you can’t send many requests, maybe one per minute
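If you script your own requests against a rate-limited free model, spacing them out avoids hitting the limit. Here is a minimal sketch of a client-side throttle. The class name `RequestThrottle` and the 60-second default are assumptions taken from the "maybe one per minute" estimate above, not an official OpenRouter number.

```python
import time


class RequestThrottle:
    """Enforce a minimum gap between consecutive requests."""

    def __init__(self, min_interval_seconds: float = 60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_interval_seconds = min_interval_seconds
        # clock and sleep are injectable so the class can be tested without waiting.
        self._clock = clock
        self._sleep = sleep
        self._last_request_at = None

    def wait_turn(self) -> float:
        """Block until the next request is allowed; return the seconds waited."""
        waited = 0.0
        if self._last_request_at is not None:
            elapsed = self._clock() - self._last_request_at
            remaining = self.min_interval_seconds - elapsed
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        # Record when this request was allowed to go out.
        self._last_request_at = self._clock()
        return waited
```

Call `wait_turn()` right before each request. The first call returns immediately, and later calls sleep only as long as needed to keep the gap.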


Feb 10, 2025

Get Formamorph

Formamorph

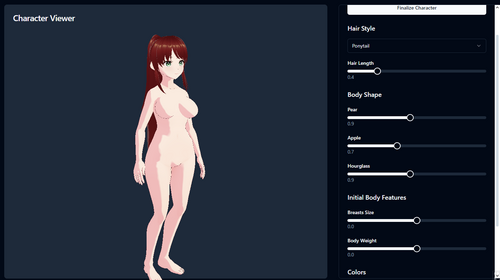

Every choice transforms your body and shapes your adventure

| Status | In development |

| Author | FieryLion |

| Genre | Interactive Fiction |

| Tags | Adult, bbw, belly, Erotic, Female Protagonist, inflation, pregnancy, vore, weight-gain |


Comments


Every time I try to use any AI model following these steps I get a message saying the model doesn't exist. Can someone please tell me what I might be doing wrong?

Endpoint URL HAS to be https://openrouter.ai/api/v1/chat/completions

API key is the token generated in the top right menu->Keys

Model name has to be the actual internal model name you see on its page, you can even ask the AI model itself. For example: mistralai/mistral-7b-instruct:free
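Since a stray space or a display name in place of the internal name seems to be a common cause of the "model doesn't exist" message, here is a small sketch that flags those slips before you paste the name into the game. The function `model_name_problems` is hypothetical, and its checks are only guesses based on the model names shown on this page (like mistralai/mistral-7b-instruct:free), not an official OpenRouter rule.

```python
def model_name_problems(raw: str) -> list[str]:
    """Return a list of likely copy-paste problems in a model name (empty if none found)."""
    problems = []
    if raw != raw.strip():
        problems.append("leading or trailing whitespace")
    name = raw.strip()
    if not name:
        problems.append("empty model name")
        return problems
    if " " in name:
        # Display names such as "Mistral Nemo" contain spaces; internal names here do not.
        problems.append("contains spaces (looks like a display name, not the internal name)")
    if "/" not in name:
        # Every internal name on this page has the form provider/model.
        problems.append("missing the 'provider/' prefix")
    return problems


print(model_name_problems(" mistralai/mistral-7b-instruct:free\n"))
```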

Thank you for getting back to me on this. I was doing that but I think the issue was a typo or something in what I was putting into the respective sections. Also found out the URL field really didn't like staying the same when I left the window for some reason. Don't know what was causing that but it's stopped now.

I am trying to host locally with LM Studio, but am getting 'Warning: No history memory! Increase memory limit to retain story history.' I don't see where I can change that and Google hasn't turned up answers. Do I need to create an API key for a local instance? Sorry for the beginner questions...

you need to increase the in-game memory limit in the settings, but make sure the limit is not more than the limit set in LMStudio

NOTE: there are really good unrestricted and uncensored free models on Chutes, such as Deepseek V3.

Hey I use NovelAI and trying to set it up with the Token but I'm not sure how to get it to work, any ideas?

It uses the Llama 3 erato model, but idk what to set the endpoint URL as

The errors I get are "Failed to process AI request" and "Cannot POST /", and I'm sure I used api.novelai.net as the endpoint url and put the correct token. I got other errors when I didn't use the correct endpoint url

The model was Llama 3 Erato, and the limits I didn't change yet

It doesn’t look like NovelAI allows you to use its API this way unfortunately

Look, let's say I created my own AI to generate an api key, and then what should I write in the neural network name field? And in the field with the link you need to insert the same link from the manual?

for the link yes, you need to copy paste the same openrouter URL

for the model name, pick a model name from openrouter

I didn't understand anything from this Guide, but I did everything right, the neural network works, but with some errors in the sense that it doesn't spell words correctly or even erases the text, maybe there are other options, or use more productive neural networks?

Is it possible to run this with a local model run with KoboldCpp?

When I try to use the model you've listed in this guide, it gives me the error code:

I can’t create explicit content. Is there anything else I can help you with?

Is there any way I can kind of try to get around this? Or should I just use a different one? Because this happened with the free model that you listed in the quick guide.

Does the meta-llama/llama-3.3-70b-instruct:free model have a limit? It stopped working after a few hours of use.

I think it may have a daily limit

I can’t seem to find mistral Nemo instruct on open router. Only mistral Nemo. Mistral Nemo is the one I’m using and it seems inconsistent. What other one could I use that would be similar to mistral Nemo instruct?

I assume you’re looking for paid models, then mistralai/mixtral-8x7b-instruct is very smart and uncensored

thank you!

with that AI is there a way to make it text a bit more? in the settings i have max memory at 33k and max output tokens at 8k but it seems to only make around 3-5 sentences.

in settings, uncheck the Single Paragraph Event option

thank you so much! sorry I have been posting comments so much. I really appreciate your help :)!

you’re welcome!

i'm getting a ton of error messages saying "the ai model was unable to produce the correct json format. try a different model." and i thought i followed the tutorial perfectly, sometimes it works and i just have to wait a bit to get to the next hour but it's a bit annoying, sometimes the default ai does this too


That means you probably need to use a smarter, bigger model (make sure the model is an instruct model). The default AI isn’t great either sorry but my potato PC can’t run the good stuff.
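To see what "correct JSON format" means in practice, here is a rough sketch of how a client might try to pull a JSON object out of a model's reply. The function `parse_json_reply` is hypothetical; the game's real parser and the fields it expects are not documented here, so treat this purely as an illustration of why weaker models fail.

```python
import json


def parse_json_reply(reply: str):
    """Return the JSON object found in a model reply, or None if there is no valid one."""
    # Models often add chatter around the JSON, so look at the outermost {...} span only.
    start = reply.find("{")
    end = reply.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        return json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        # Truncated or malformed output, which is typical of small models.
        return None
```

A refusal such as "I can’t create explicit content." contains no JSON at all, so it would come back as `None` here, which is the kind of reply that leads to a format error.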

what model do you recommend apart from 7b that is smarter

anything larger that is an instruct model, I strongly recommend nemo, or if you are paying for an AI provider like open router then Magnum 72B or bigger llama finetunes

is there a way to run this locally?

if you know how to set up a local LLM, just use your localhost URL and port number instead of the openrouter URL.

However you have to use the downloaded version because you cannot enter a localhost URL into the itch.io browser game due to security policies

The easiest way is through Ollama. Download and install it. After that, open a command prompt and type in ollama run mistral:7b-instruct

After the installation is done and the model is running, open the game and in the configurations, for Endpoint URL, type in http://localhost:11434/v1/chat/completions and for Model name, type in mistral:7b-instruct

After that you should be ready. I must say tho, that it is not working that well, mostly because the model is dumb. If you can run a better model, you can do it with Ollama, you will just need to host the model with a different command and set the model name to the name of the model you chose.

(edit: if you encounter issues like game out of VRAM, try to lower the Max Memory in settings)